Our Technology

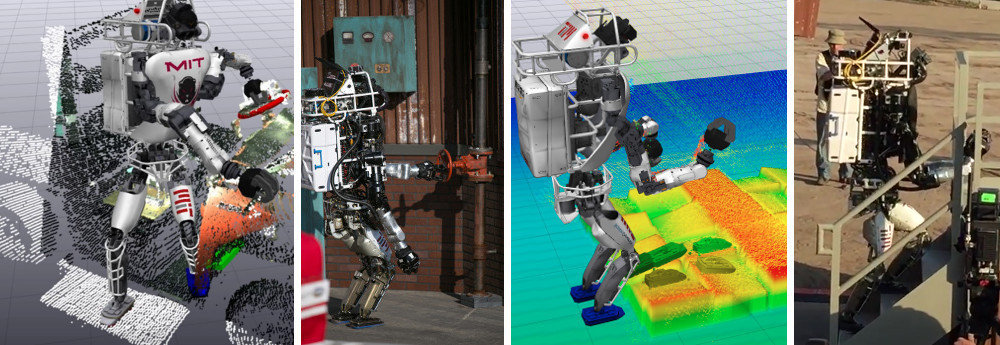

The challenges presented by the DARPA Robotics Challenge require new approaches to high-dimensional planning and stable locomotion, combined with suitable perception and user-interaction tools that enable effective cooperation between the human operator and the robot. Here we present an overview of the system design and technology developed by our team.

We have developed our own tools for planning, controls, simulation, and visualization. Much of the software developed by Team MIT has been integrated into the Drake toolbox (http://drake.mit.edu), which is freely available to the world.

Lots of videos

New content appears on our YouTube channel more quickly than we can update this page. Check it out at http://youtube.com/mitdrc.

Planning

To execute complex tasks in the real world, our robot needs tools to plan and reason about movements through its environment. Standing, walking, and manipulating objects require the ability to generate sequences of motions that can be executed safely. We create planning tools that automatically respect the robot’s physical limits where possible, and our human operator can review planned robot actions to verify their safety.

Manipulation Planning

A central component of our manipulation system is a powerful inverse kinematics (IK) engine. At its heart, the IK engine computes the robot joint angles needed to achieve real-world objectives, such as positioning the robot’s hand at a given point in space, as shown below:

However, IK extends far beyond single hand poses. In this video, the planner computes an entire trajectory that brings the hand to its goal while keeping the robot balanced and avoiding collisions with the world:

The IK engine is implemented as a nonlinear optimization problem and uses the high-performance SNOPT solver to compute locally optimal solutions efficiently. More detail can be found in [7].
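To make the idea of IK as an optimization concrete, here is a minimal Python sketch under loudly simplified assumptions: a hypothetical planar two-link arm (0.5 m links, made-up joint limits) instead of Atlas, a single hand-position objective plus a small posture cost, and a damped Gauss-Newton loop standing in for SNOPT. Like the engine described above, it returns a locally optimal solution that depends on the starting posture.

```python
import math

# Hypothetical planar arm: two 0.5 m links (not Atlas's kinematics).
L1, L2 = 0.5, 0.5
# Hypothetical joint limits in radians: shoulder, elbow.
LIMITS = [(-math.pi, math.pi), (0.0, math.pi)]


def forward_kinematics(q):
    """Hand position (x, y) for joint angles q = [q1, q2]."""
    c1, s1 = math.cos(q[0]), math.sin(q[0])
    c12, s12 = math.cos(q[0] + q[1]), math.sin(q[0] + q[1])
    return (L1 * c1 + L2 * c12, L1 * s1 + L2 * s12)


def jacobian(q):
    """2x2 Jacobian of the hand position with respect to the joint angles."""
    c1, s1 = math.cos(q[0]), math.sin(q[0])
    c12, s12 = math.cos(q[0] + q[1]), math.sin(q[0] + q[1])
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]


def solve_ik(target, q_nominal, w=1e-4, damping=1e-3, iters=200):
    """Minimize |fk(q) - target|^2 + w * |q - q_nominal|^2 by damped
    Gauss-Newton, projecting onto the joint limits after every step."""
    q = list(q_nominal)
    for _ in range(iters):
        x, y = forward_kinematics(q)
        e = (target[0] - x, target[1] - y)  # hand position error
        J = jacobian(q)
        # Normal equations: (J^T J + (w + damping) I) dq = J^T e - w (q - q_nominal)
        a = J[0][0] ** 2 + J[1][0] ** 2
        b = J[0][0] * J[0][1] + J[1][0] * J[1][1]
        d = J[0][1] ** 2 + J[1][1] ** 2
        mu = w + damping
        g0 = J[0][0] * e[0] + J[1][0] * e[1] - w * (q[0] - q_nominal[0])
        g1 = J[0][1] * e[0] + J[1][1] * e[1] - w * (q[1] - q_nominal[1])
        det = (a + mu) * (d + mu) - b * b  # positive: the matrix is positive definite
        dq0 = ((d + mu) * g0 - b * g1) / det
        dq1 = (-b * g0 + (a + mu) * g1) / det
        # Take the step, then clamp each joint into its limits.
        q = [min(max(q[i] + dq, lo), hi)
             for i, (dq, (lo, hi)) in enumerate(zip((dq0, dq1), LIMITS))]
        if abs(dq0) + abs(dq1) < 1e-10:
            break
    return q
```

Calling `solve_ik((0.6, 0.4), (0.3, 1.0))` returns joint angles whose forward kinematics land very close to the target. The posture weight `w` trades exact reaching against staying near `q_nominal`; a full-body engine adds many more costs and constraints (balance, collisions, trajectories) to the same kind of problem.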

Footstep Planning

To cross difficult terrain, or even to cross a room without tripping over itself, our robot must be able to decide where to place its feet. We have developed several new algorithms for footstep planning. Our earlier work used nonlinear optimization to plan footsteps on flat ground, as described in [7].

More recently, we have developed a mixed-integer convex optimization approach to footstep planning on a wide variety of terrains. Using our IRIS algorithm [3], we can compute large convex regions of safe terrain. These regions are the input to a mixed-integer optimization that chooses the number of footsteps and their locations on the terrain in a globally optimal manner. More detail can be found in [5]. The footstep planner is demonstrated on a few simple terrains in the video below:
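A toy version of the mixed-integer structure fits in a few lines, assuming a 1D walkway whose safe terrain is already split into intervals (the 1D analogue of convex regions). The integer decision is which region each footstep lands in; for a fixed assignment, the continuous constraints (stay inside the region, step no longer than `max_step`) are intervals that can be propagated directly. Brute-force enumeration over assignments, trying the fewest steps first, stands in for a mixed-integer convex solver and is only practical for tiny problems; all names and numbers here are hypothetical.

```python
from itertools import product


def plan_footsteps(start, goal, regions, max_step, max_steps=10):
    """Return (assignment, positions) using the fewest footsteps, or None.

    regions: list of (lo, hi) safe intervals along a 1D walkway.
    The last footstep is placed exactly at the goal.
    """
    for n in range(1, max_steps + 1):  # fewest steps first => optimal step count
        # Integer part: every way of assigning the n steps to regions.
        for assignment in product(range(len(regions)), repeat=n):
            reach = []  # feasible interval for each step, given the earlier steps
            lo, hi = start, start
            for r in assignment:
                rlo, rhi = regions[r]
                # Continuous part: within max_step of the previous step AND inside region r.
                lo, hi = max(lo - max_step, rlo), min(hi + max_step, rhi)
                if lo > hi:
                    break  # this assignment is infeasible
                reach.append((lo, hi))
            else:
                if reach[-1][0] <= goal <= reach[-1][1]:
                    # Recover concrete positions backwards: clamping the next step
                    # into the previous interval keeps every step within max_step.
                    positions = [goal]
                    for lo_i, hi_i in reversed(reach[:-1]):
                        positions.append(min(max(positions[-1], lo_i), hi_i))
                    return list(assignment), positions[::-1]
    return None
```

For regions `[(0.0, 0.5), (0.8, 1.2), (1.6, 2.5)]`, a start at 0.0, a goal at 2.3 and `max_step=0.5`, the sketch returns six steps with assignment `[0, 1, 1, 2, 2, 2]`; five steps cannot work because no step can land between 1.2 and 1.6, which caps progress.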

Controls

All the planning in the world is of no use without the ability to execute planned motions on the robot. We use a custom controller based on an efficiently solvable quadratic program (QP) to compute desired joint torques in real time as the robot moves. Balancing, walking, and manipulation are all accomplished with the same core controller. More information about our QP controller can be found in [2] and [8].
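As a hedged illustration of why a QP suits this job, the sketch below solves one small piece of a balance problem: distributing the robot's weight over four hypothetical contact points at the corners of a foot so that the centre of pressure lands at a desired location, while minimizing the sum of squared forces. It handles only equality constraints, solved exactly through the KKT system; the inequality constraints a real controller needs (non-negative normal forces, friction cones, torque limits) are omitted, and the foot dimensions and mass are assumptions rather than Atlas parameters. It is not the controller described in [2] and [8].

```python
def solve_linear(M, v):
    """Solve M x = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    a = [[float(c) for c in row] + [float(v[i])] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("singular system")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (a[i][n] - sum(a[i][j] * x[j] for j in range(i + 1, n))) / a[i][i]
    return x


def solve_equality_qp(H, g, A, b):
    """min 0.5 x^T H x + g^T x  s.t.  A x = b, via the KKT system
    [[H, A^T], [A, 0]] [x; lam] = [-g; b]."""
    n, m = len(H), len(A)
    kkt = [list(H[i]) + [A[j][i] for j in range(m)] for i in range(n)]
    kkt += [list(A[j]) + [0.0] * m for j in range(m)]
    sol = solve_linear(kkt, [-gi for gi in g] + list(b))
    return sol[:n], sol[n:]  # primal solution, Lagrange multipliers


# Hypothetical foot: four corner contacts (x, y) in metres, vertical forces only.
CORNERS = [(0.1, 0.05), (0.1, -0.05), (-0.1, 0.05), (-0.1, -0.05)]


def distribute_forces(mass, cop, gravity=9.81):
    """Corner forces that support the body weight with the centre of pressure
    at cop, minimizing the sum of squared forces (equality constraints only)."""
    weight = mass * gravity
    H = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    g = [0.0] * 4
    A = [[1.0] * 4,                 # forces sum to the body weight
         [x for x, _ in CORNERS],   # moment balance -> centre of pressure x
         [y for _, y in CORNERS]]   # moment balance -> centre of pressure y
    b = [weight, cop[0] * weight, cop[1] * weight]
    forces, _ = solve_equality_qp(H, g, A, b)
    return forces
```

With `cop=(0.0, 0.0)` each corner carries a quarter of the weight; shifting the target to `(0.05, 0.0)` loads each front corner with 0.375 of the weight and each rear corner with 0.125, all still non-negative. Pushing the target further forward eventually makes a rear force negative, which is exactly the case the omitted inequality constraints exist to prevent.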

Our controller uses an accurate model of the robot’s entire body, allowing it to balance in a wide variety of postures…

…and to remain balanced with significant unmodeled disturbances:

The QP controller also allows Atlas to walk quickly and precisely on a variety of terrains:

Since our controller is based on a model of the robot, applying it to new hardware only requires an update to the model. Here we show the new Atlas after its recent upgrade by Boston Dynamics (BDI). We were able to rapidly apply our QP controller to the new hardware, even though its joint configuration and mass distribution were dramatically different from those of the prior Atlas version:
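The point about models can be illustrated with one quantity every balance controller depends on: the whole-body centre of mass. In this minimal sketch the robot is just data (a hypothetical list of link masses and link centre-of-mass positions), so moving to hardware with a different mass distribution means changing the data, not the code. Real robot models, such as URDF files, carry far more: the kinematic tree, inertias, and joint limits.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Link:
    name: str
    mass: float                      # kg
    com: Tuple[float, float, float]  # link centre of mass (x, y, z), metres


def center_of_mass(links: List[Link]) -> Tuple[float, float, float]:
    """Mass-weighted average of the link centres of mass."""
    total = sum(link.mass for link in links)
    return tuple(sum(link.mass * link.com[k] for link in links) / total
                 for k in range(3))
```

Swapping in a new hypothetical link list (for example, a heavier torso mounted higher) changes the centre of mass the controller balances over without touching `center_of_mass` itself.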

State Estimation

Precise execution of stable motions requires the robot to have accurate information about its current posture and location in the world. To that end, we have developed a new state estimator that combines information from the robot’s joint position sensors, its onboard accelerometers and gyroscopes, and its laser rangefinder to determine the robot’s state with little or no drift. This enables accurate, repeatable foot placement, and even allows the robot to track its state accurately without any contact with the ground, as shown below:

More details about our state estimator can be found in [4], and source code is available from https://github.com/mitdrc/pronto.
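The core idea of fusing a fast but drifting sensor with a slower, drift-free one can be sketched with a 1D linear Kalman filter: IMU acceleration drives the prediction of position and velocity, and a position measurement (standing in for leg kinematics or LIDAR localization) corrects it. This is a deliberate simplification, not Pronto's filter: one axis, a made-up constant accelerometer bias, a crude process noise `q * I`, and hypothetical noise values.

```python
def kf_predict(x, P, accel, dt, q=1e-3):
    """Propagate the state [position, velocity] with one IMU acceleration sample."""
    p, v = x
    p, v = p + v * dt + 0.5 * accel * dt * dt, v + accel * dt
    (P00, P01), (P10, P11) = P
    # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and a crude Q = q * I.
    P = [[P00 + dt * (P01 + P10) + dt * dt * P11 + q, P01 + dt * P11],
         [P10 + dt * P11, P11 + q]]
    return [p, v], P


def kf_update(x, P, z, r=1e-2):
    """Correct with a position measurement z (e.g. from leg kinematics)."""
    (P00, P01), (P10, P11) = P
    s = P00 + r                  # innovation variance for H = [1, 0]
    k0, k1 = P00 / s, P10 / s    # Kalman gain
    innov = z - x[0]
    x = [x[0] + k0 * innov, x[1] + k1 * innov]
    P = [[(1 - k0) * P00, (1 - k0) * P01],
         [P10 - k1 * P00, P11 - k1 * P01]]
    return x, P


def run(n_steps=1000, dt=0.01, accel_bias=0.01, true_pos=1.0, update_every=10):
    """The robot actually stands still at true_pos; the IMU reports a small bias.
    update_every=None disables corrections (pure dead reckoning)."""
    x, P = [true_pos, 0.0], [[1e-4, 0.0], [0.0, 1e-4]]
    for k in range(1, n_steps + 1):
        x, P = kf_predict(x, P, accel_bias, dt)
        if update_every and k % update_every == 0:
            x, P = kf_update(x, P, true_pos)
    return x[0]
```

With the biased accelerometer and no corrections, `run(update_every=None)` integrates to about 1.5 m after 10 s for a robot that never moved, while `run()`, with a position fix every tenth step, stays close to the true 1.0 m.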

Perception and Visualization

Our custom user interface integrates information from the robot’s state estimator, laser scanner, onboard cameras, and force sensors to give the human operator a detailed view of the world. The operator can request heightmaps and plan footsteps (as shown in this video), manipulate the robot’s end effectors (as shown here), and make complex plans to manipulate objects in the environment (seen here).
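One common way to turn laser returns into a heightmap is to bin points into a 2D grid and keep one height per cell. The sketch assumes points already expressed in a world frame and keeps the maximum height per cell, a conservative choice for stepping; the cell size and function names are hypothetical and not taken from our interface.

```python
import math


def build_heightmap(points, cell_size):
    """Bin world-frame points (x, y, z) into grid cells, keeping the highest z per cell."""
    grid = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z > grid[cell]:
            grid[cell] = z
    return grid


def height_at(grid, x, y, cell_size):
    """Height of the cell containing (x, y), or None if no point fell there."""
    return grid.get((math.floor(x / cell_size), math.floor(y / cell_size)))
```

Cells that received no returns stay unknown (`None`), which a planner should treat as unsafe rather than as flat ground.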

Putting it all Together: Autonomy and Integration

Our recent developments in planning, sensing, and controls have given Atlas new capabilities in a variety of tasks. The IK engine and manipulation planners allow us to generate balanced reaching motions:

Combining the IK tool with the footstep planner, state estimator, whole-body controller, and perception system lets Atlas autonomously seek out an object on the ground, move to it, and pick it up repeatedly:

More complex behaviors can also be executed using our tools. In the following video, the human operator indicates the location of a tabletop in our user interface. The perception tools automatically identify objects on the table, and our planning tools allow the robot to plan motions to pick up one or more objects, place them in a bin, and then return to the table and repeat the task. Here we show the robot’s planned sequence of motions, demonstrating whole-body manipulation and walking plans:

And here we show the execution of the autonomous pick-and-place behavior on the robot:
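The repeat-until-empty behavior described above has a simple finite-state-machine skeleton. In this sketch the perception and grasp steps are hypothetical callables and the walking steps are only log entries, not real planner calls; it shows the control flow alone: walk to the table, look for objects, pick one, carry it to the bin, place it, and return until perception reports that the table is clear.

```python
from enum import Enum, auto


class Phase(Enum):
    WALK_TO_TABLE = auto()
    PERCEIVE = auto()
    PICK = auto()
    WALK_TO_BIN = auto()
    PLACE = auto()
    DONE = auto()


def run_pick_and_place(detect_objects, grasp, max_objects=20):
    """Clear a table into a bin; returns the sequence of high-level actions.

    detect_objects: hypothetical callable returning objects currently on the table.
    grasp: hypothetical callable that picks up the given object.
    """
    log, phase, target, picked = [], Phase.WALK_TO_TABLE, None, 0
    while phase is not Phase.DONE:
        if phase is Phase.WALK_TO_TABLE:
            log.append("walk:table")  # footstep planning and execution would go here
            phase = Phase.PERCEIVE
        elif phase is Phase.PERCEIVE:
            objects = detect_objects()
            if not objects:
                phase = Phase.DONE    # table is clear
            elif picked >= max_objects:
                raise RuntimeError("too many picks; stopping for the operator")
            else:
                target, phase = objects[0], Phase.PICK
        elif phase is Phase.PICK:
            grasp(target)             # reach and grasp planning would go here
            picked += 1
            log.append(f"pick:{target}")
            phase = Phase.WALK_TO_BIN
        elif phase is Phase.WALK_TO_BIN:
            log.append("walk:bin")
            phase = Phase.PLACE
        elif phase is Phase.PLACE:
            log.append(f"place:{target}")
            phase = Phase.WALK_TO_TABLE
    return log
```

For a simulated table holding `["cup", "box"]`, passing `lambda: list(table)` and `table.remove` clears both objects and ends with a final walk back to the empty table. The `max_objects` guard keeps a perception failure (an object that is never removed) from looping forever.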

Like the controller, our planning tools rely only on a general model of the robot. As a result, we can plan motions and footsteps for a wide variety of robots. In the following video, we show the same planning sequence applied to NASA’s Valkyrie robot:

In this video, the robot executes one of the DRC tasks (turning a valve and lever) without any interaction from our operator:

Finally, in this video we combine the footstep planner described in [5] with an automatic perception tool capable of identifying regions of safe terrain as the robot walks. From the robot’s laser scanner or stereo cameras, we continuously build a map of the world, identify safe terrain, and plan footsteps to keep moving forward. This allows continuous locomotion on unmodeled terrain with no input needed from the human operator:
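A very simplified version of "identify safe terrain" splits a terrain profile into stretches whose slope stays under a threshold. The sketch assumes a 1D height profile sampled at a fixed spacing, with hypothetical `max_slope` and `min_length` values. The resulting intervals are the kind of convex regions a footstep planner like the one in [5] consumes, whereas the planner described earlier obtains its regions from IRIS [3] rather than from a threshold test like this one.

```python
def safe_intervals(heights, spacing, max_slope=0.15, min_length=0.3):
    """Split a 1D terrain profile into intervals whose local slope stays gentle.

    heights: terrain height samples taken every `spacing` metres.
    Returns (start_x, end_x) intervals that are at least min_length long.
    """
    intervals, start = [], 0
    for i in range(1, len(heights) + 1):
        # A steep jump between samples i-1 and i ends the current safe stretch.
        steep = (i < len(heights)
                 and abs(heights[i] - heights[i - 1]) / spacing > max_slope)
        if i == len(heights) or steep:
            lo, hi = start * spacing, (i - 1) * spacing
            if hi - lo >= min_length:  # drop stretches too short to stand on
                intervals.append((lo, hi))
            start = i
    return intervals
```

In a continuous-walking loop, a function like this would be re-run on every map update, with the planner replanning the next few footsteps over whatever intervals it returns.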

Publications

- [1] A summary of Team MIT's approach to the Virtual Robotics Challenge, R. Tedrake, M. Fallon, S. Karumanchi, S. Kuindersma, M. Antone, T. Schneider, T. Howard, M. Walter, H. Dai, R. Deits, M. Fleder, D. Fourie, R. Hammoud, S. Hemachandra, P. Ilardi, C. Perez-D'Arpino, S. Pillai, A. Valenzuela, C. Cantu, C. Dolan, I. Evans, S. Jorgensen, J. Kristeller, J. A. Shah, K. Iagnemma, and S. Teller. ICRA. May 2014.

- [2] An efficiently solvable quadratic program for stabilizing dynamic locomotion, S. Kuindersma, F. Permenter, R. Tedrake. ICRA. May 2014.

- [3] Computing large convex regions of obstacle-free space through semidefinite programming, R. Deits, R. Tedrake. WAFR. August 2014.

- [4] Drift-Free Humanoid State Estimation fusing Kinematic, Inertial and LIDAR sensing, M. Fallon, M. Antone, N. Roy, S. Teller. Humanoids. November 2014.

- [5] Footstep Planning on Uneven Terrain with Mixed-Integer Convex Optimization, R. Deits and R. Tedrake. Humanoids. November 2014.

- [6] Whole-body Motion Planning with Simple Dynamics and Full Kinematics, H. Dai, A. Valenzuela, R. Tedrake. Humanoids. November 2014.

- [7] An Architecture for Online Affordance-based Perception and Whole-body Planning, M. Fallon, S. Kuindersma, S. Karumanchi, M. Antone, T. Schneider, H. Dai, C. Perez-D'Arpino, R. Deits, M. DiCicco, D. Fourie, T. Koolen, P. Marion, M. Posa, A. Valenzuela, K. T. Yu, J. Shah, K. Iagnemma, R. Tedrake, S. Teller. Journal of Field Robotics. 2015.

- [8] Optimization-based locomotion planning, estimation, and control design for Atlas, S. Kuindersma, R. Deits, M. Fallon, A. Valenzuela, H. Dai, F. Permenter, T. Koolen, P. Marion, R. Tedrake. Autonomous Robots. 2015.